Jobs-to-be-Done (JTBD) has been gaining popularity, yet few enterprises have managed to build a rich corporate culture around it. Part of the reason is that there are several different flavors of the concept, which tends to create more confusion than clarity. The method I believe is the most actionable (Outcome-Driven Innovation, or variations on it) is so rigorous that, while it works well, it generally prevents enterprise-wide adoption (or even adoption within product management).

At the end of the day, only a portion of the enterprise is involved in product planning and development. The rest is trying to get other stuff done. JTBD techniques therefore need to be adapted to other problem-solving challenges if we’re to see broader adoption.

Enterprises are complicated, which is why you see so many consulting firms introduce simple-looking frameworks for transformation. Anything more complex would simply be rejected...even if the simple ones don’t really work. I’ve watched companies embrace simplicity over and over. While it’s often at the expense of long-term success, simpler methods are the ones that get used.

So, if you have a more complicated method that doesn’t get used, maybe it can be simplified while striking a balance between consumption and effectiveness. I think it’s really that simple. One way to do that is to present the method in a form that most business professionals are familiar with...a capability model.

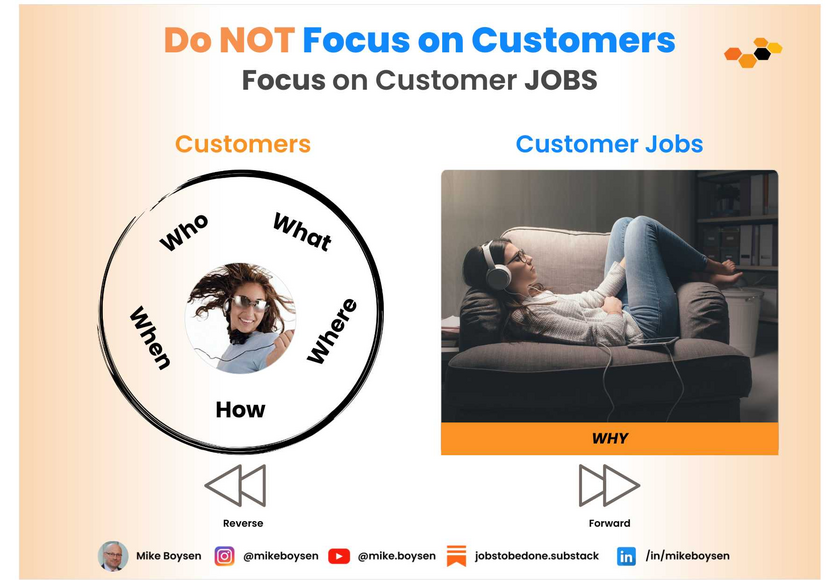

While not everyone will agree with me, capability models are very similar to “jobs-to-be-done” models. In the world of JTBD, practitioners are typically trying to do one of two things:

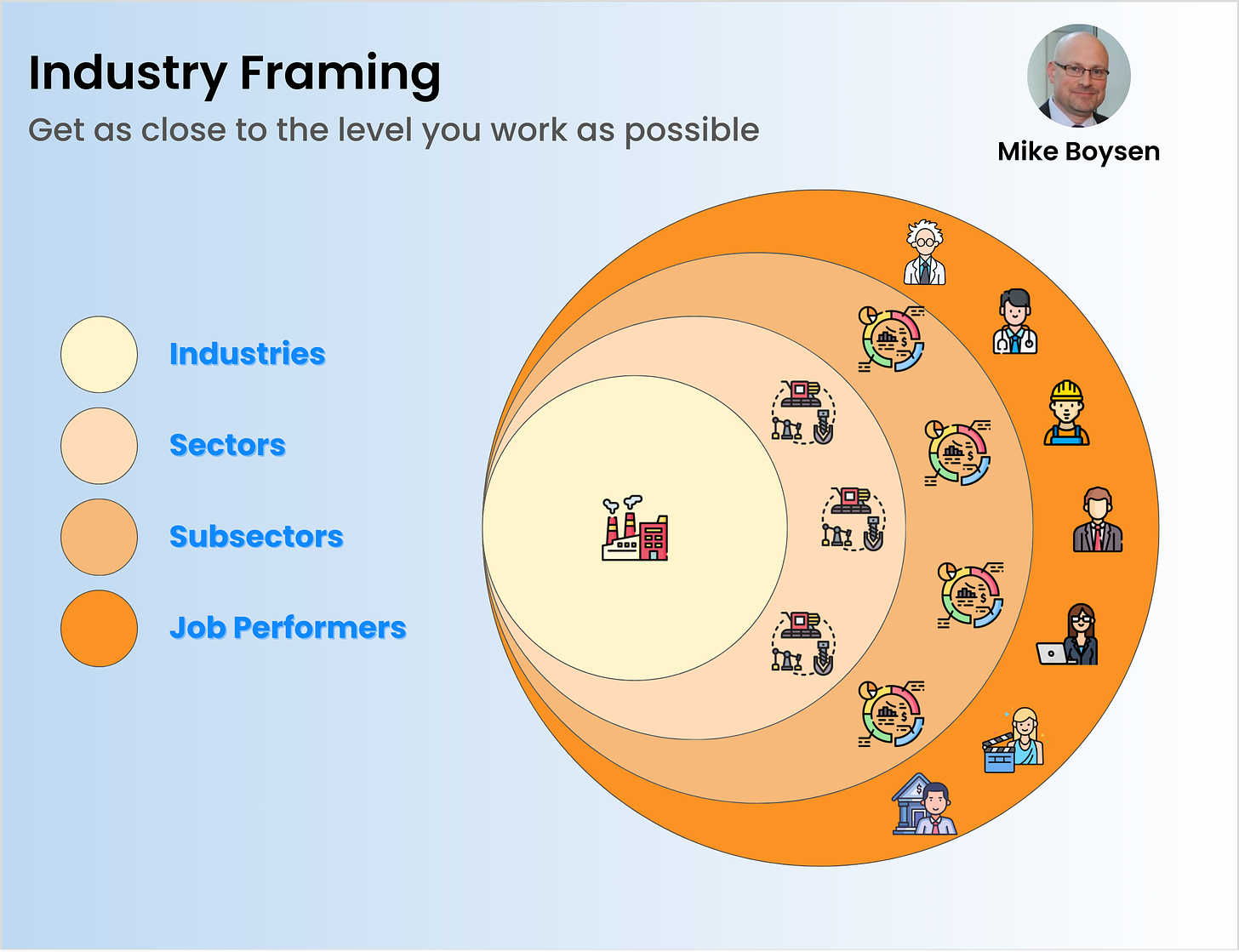

Identify a solution-agnostic market at an actionable level: a group of people trying to reach a common objective. The goal is to find gaps in this view of the market that can be exploited with new products or services. In an enterprise setting, that group could be a marketing team that is trying to develop a qualified lead and needs a better tool or platform.

Identify a brand- and channel-agnostic journey in which everyone is trying to obtain a service for a common solution. The goal here is to find gaps in how services are offered, which sometimes leads to disruptive innovation in the horizontal space. This could also be an enterprise marketing team that is seeking partners or services to reach its objectives.

Capability models often address specific functional areas closely aligned to the solution space, that is, how we do things today. However, some models take a different approach and look more closely at the underlying objective rather than at one specific way it’s done today.

Creating job maps and performance metrics is fairly straightforward once you get the hang of it, and the results, for the most part, do a fine job of eliminating bias. My goal has been to simplify that process and provide better guardrails. Since we can learn to create job maps fairly quickly, it might make more sense for JTBD practitioners to transform their maps and metrics into the more familiar capability format. I cover that process on this blog and also wrote this more comprehensive piece while working at Strategyn…

The push-back I predict is that the focus is somewhat less solutiony than that of the many capability-maturity models floating around. But if we’re looking for a true understanding of our current-state capabilities, and a way to map it to a future state, the bookends need to match in form: both ends need to be about objectives, not solutions. We can quickly get to the solutiony stuff right after we agree on what we want to accomplish and understand our true capabilities. So, let’s take a look at how we might use an existing JTBD model to create a capability map.

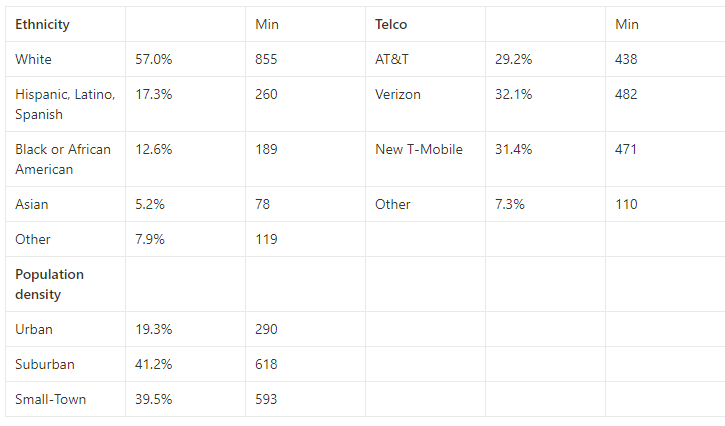

Since we’re assessing a functional group within an enterprise rather than developing a product for the general market, we don’t need to survey the general population. Internal feedback from key stakeholders is really all we need for this exercise. We’re essentially repurposing a jobs-to-be-done framework (or model) for use in more common internal assessments.

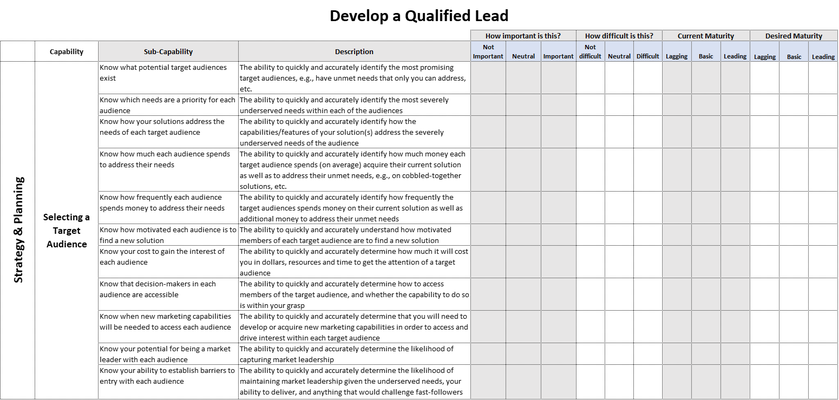

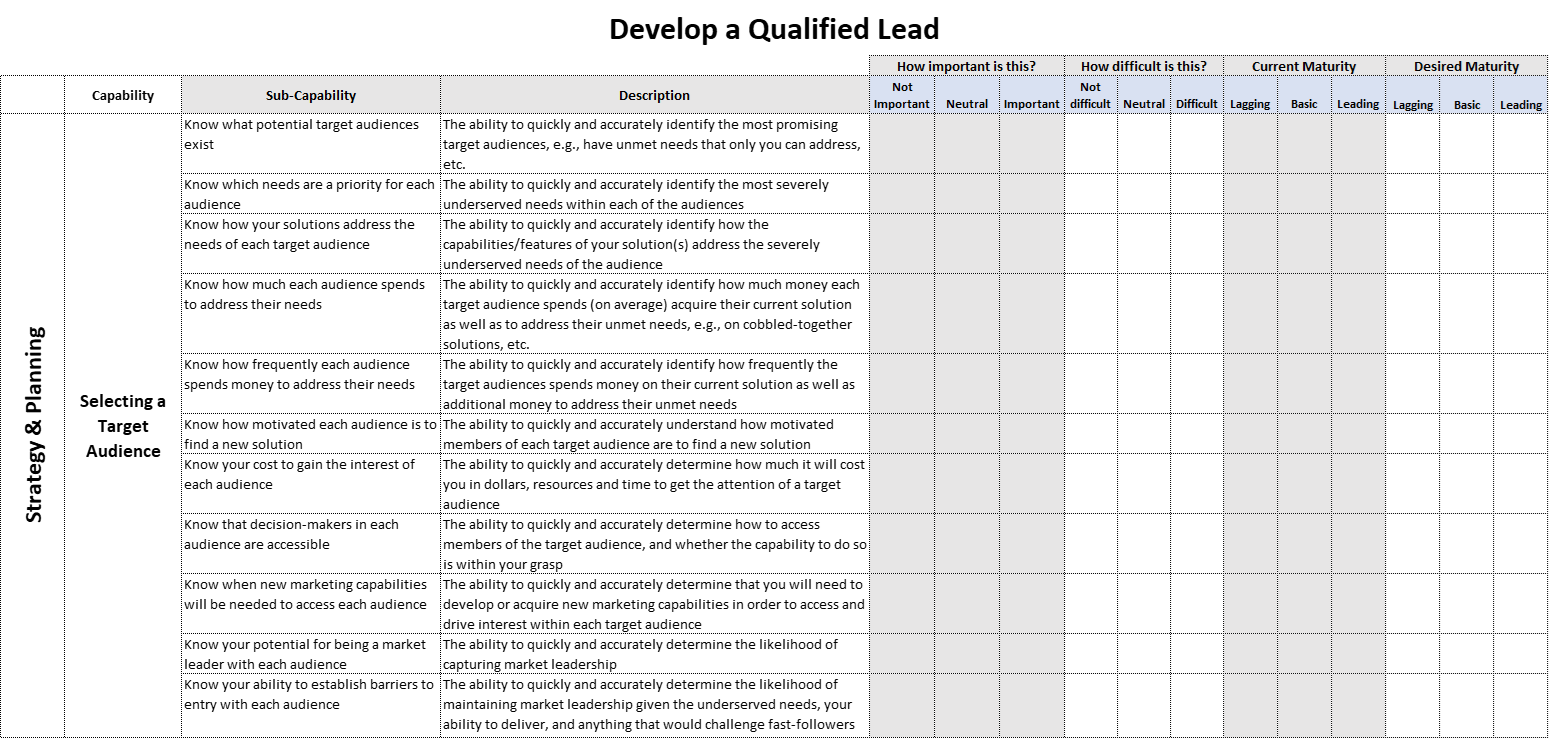

For example, if we’re evaluating a marketing organization’s ability to develop a qualified lead, the stakeholders who have responsibility across the value chain are the source of our inputs. They’re essentially the doers, or end users, who are accountable. If any capability model works, then this one certainly will.

It just serves a slightly different purpose than the ones designed to push you toward a technology purchase before you know what your technology investment needs to enable.

This 👆 will be an eye-opener as you begin talking to vendors. This is not a feature-oriented capability model, and that’s all vendors want to talk about.

Three Stages

Everyone frames their models differently, so I’ll borrow a little from each of the ones I’ve seen and add elements where necessary. It’s still going to be simple.

Strategy & Planning - These are the capabilities you must excel at before you execute toward your objective. Think of it as getting ready and aiming. These are the things that happen before you execute the job.

Execution & Operation - These are the capabilities that put your plan into action. This is what I like to call theater. These are the things that happen while executing the job.

Reporting & Analysis - These are the capabilities that help you monitor your operation and analyze its performance after the fact. These are the things that happen after executing the job.

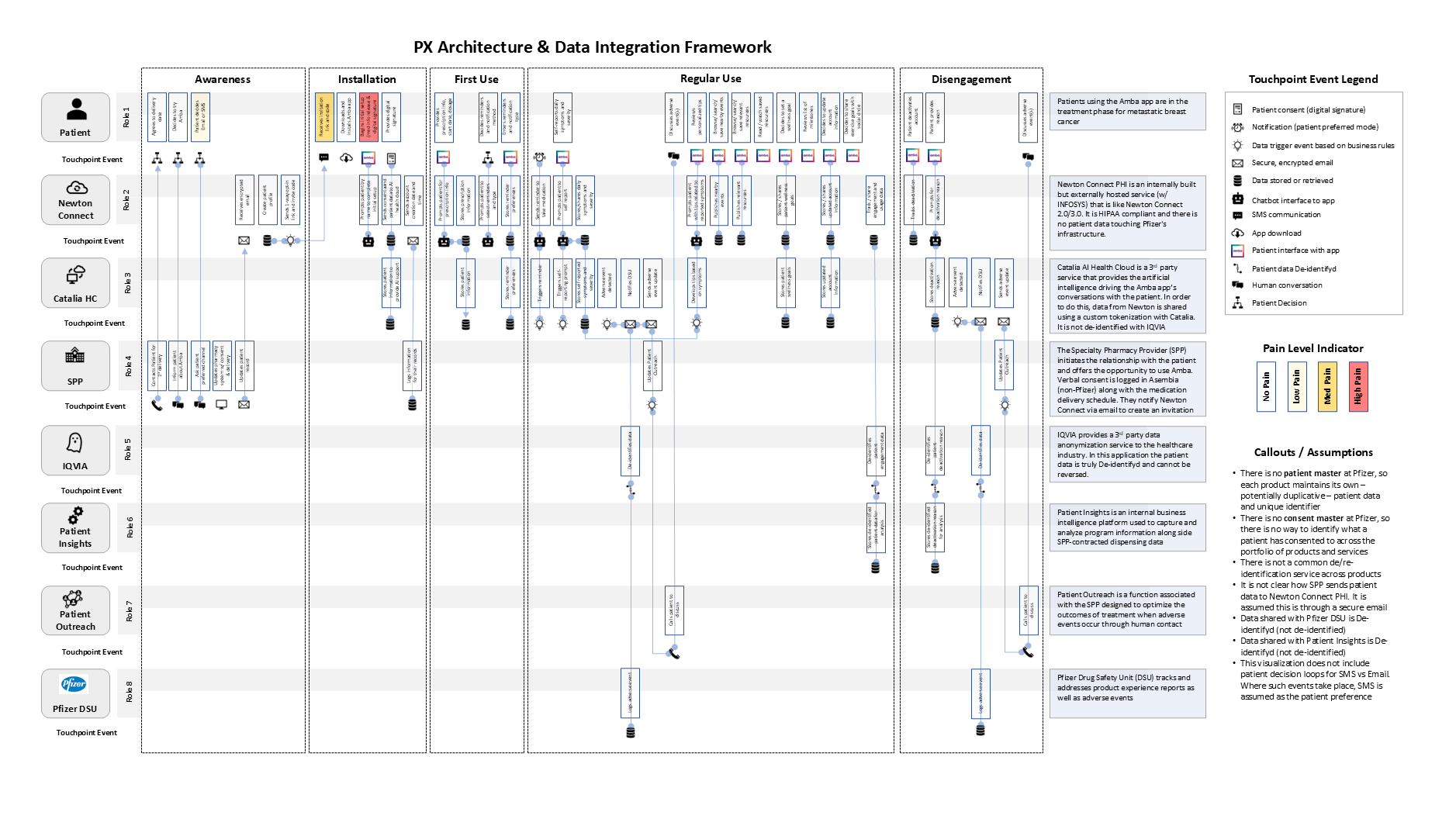

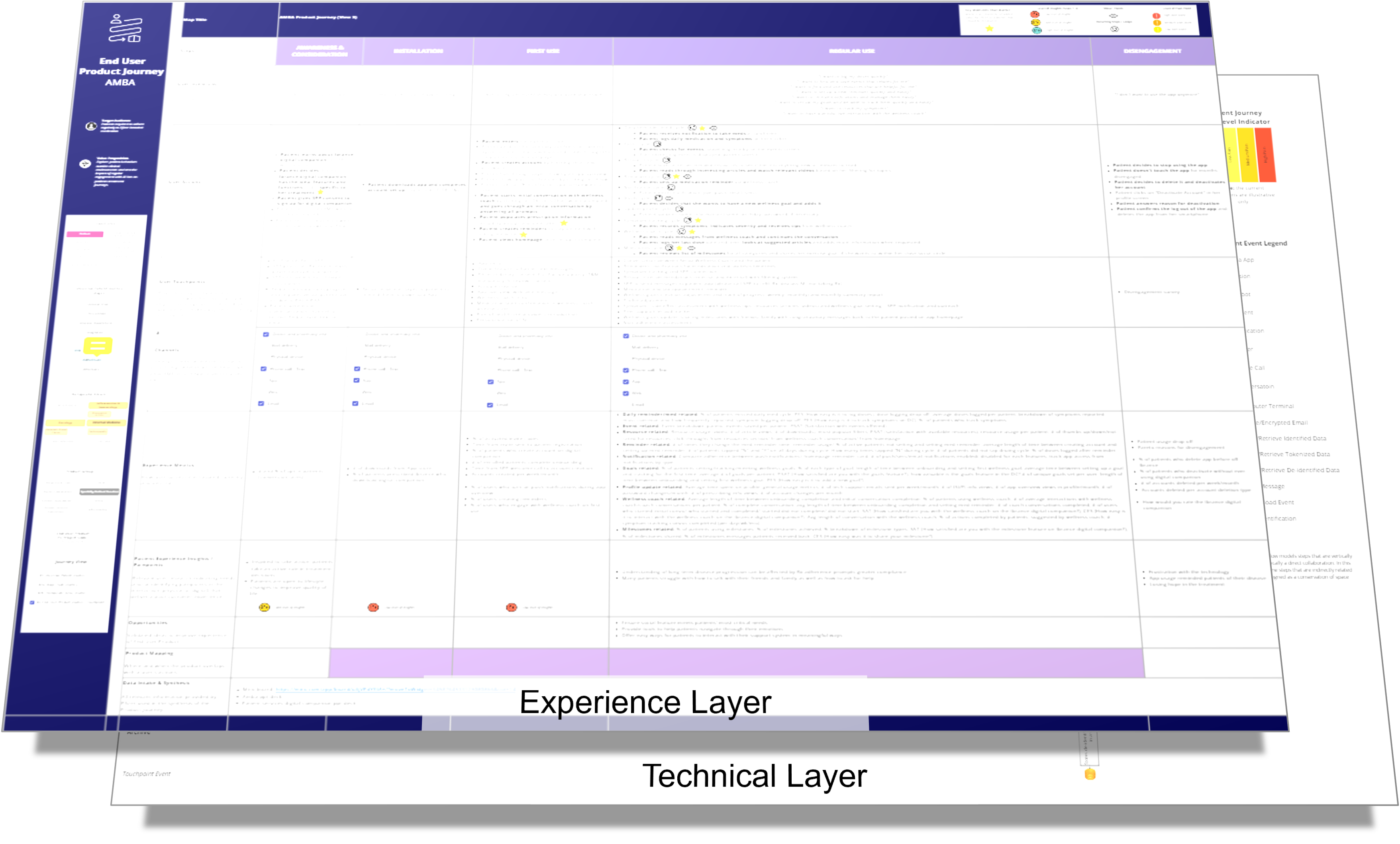

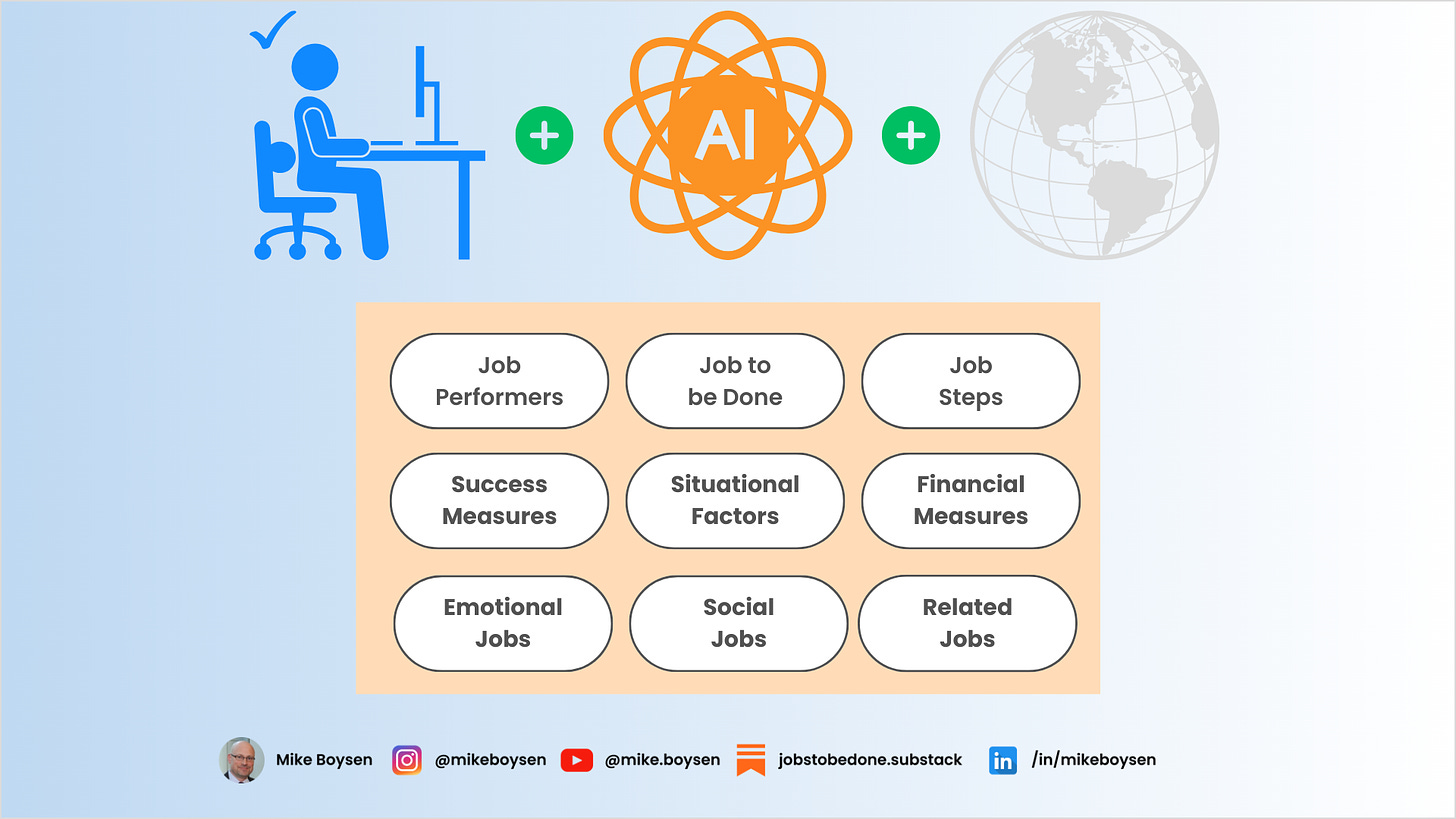

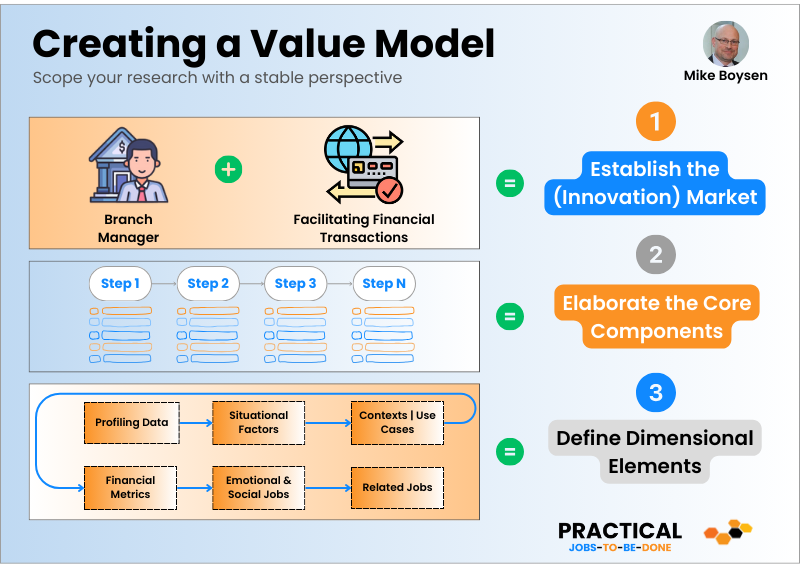

This breakdown works very well with the concept of job mapping, which comes from Strategyn’s Outcome-Driven Innovation methodology. As you will see below, I’ve taken the first step from the model I constructed for you in another post…

In my approach, what was a job step becomes a Level 1 capability. Each performance metric (aka modified desired outcome) becomes a Level 2 capability (or sub-capability). We essentially have a stage, a capability, a sub-capability, and a description (which reads like a requirement, only without being prescriptive).
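Here’s a minimal Python sketch of that four-part structure, assuming you already have a job map whose steps are tagged with one of the three stages. Everything in it is hypothetical: the class names (`Stage`, `Capability`, `SubCapability`), the helper `job_step_to_capability`, and the placeholder step and metric in the usage example are illustrative, not taken from the model in the other post.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """The three stages: before, during, and after executing the job."""
    STRATEGY_AND_PLANNING = "Strategy & Planning"
    EXECUTION_AND_OPERATION = "Execution & Operation"
    REPORTING_AND_ANALYSIS = "Reporting & Analysis"


@dataclass
class SubCapability:
    """Level 2 capability, derived from one performance metric."""
    name: str
    description: str  # reads like a requirement, but is not prescriptive


@dataclass
class Capability:
    """Level 1 capability, derived from one job step."""
    stage: Stage
    name: str
    sub_capabilities: list[SubCapability] = field(default_factory=list)


def job_step_to_capability(
    stage: Stage, job_step: str, metrics: list[tuple[str, str]]
) -> Capability:
    """Convert a job step and its (metric, description) pairs into a
    Level 1 capability with one Level 2 sub-capability per metric.
    The (metric, description) input shape is a hypothetical convention."""
    return Capability(
        stage=stage,
        name=job_step,
        sub_capabilities=[SubCapability(m, d) for m, d in metrics],
    )


# Usage with a placeholder job step and metric (hypothetical examples).
capability = job_step_to_capability(
    Stage.STRATEGY_AND_PLANNING,
    "Define the lead criteria",
    [("Minimize the time it takes to agree on what qualifies a lead",
      "Stakeholders can quickly agree on what makes a lead qualified")],
)
print(capability.name, len(capability.sub_capabilities))
```

Keeping the stage on the Level 1 capability (rather than on each metric) mirrors the job map, where the stage is a property of the step.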

I realize that this will set off alarm bells with most technology consultants. Trust me, I do. However, this model should help us make sure we are not acquiring technology we don’t need (because we already have a good solution or capability), technology we don’t know how to use effectively, or technology that can’t help us accomplish our goals. At the end of the day, we should be seeking solutions that enable our needs and desired capabilities. Technology that isn’t focused on these things is simply an unfortunate expense.

You can ask vendors to map their feature matrix to your Level 1 or Level 2 capabilities to find fluff that doesn’t really map to anything important :)
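Once vendors return that mapping, finding the fluff is a simple set comparison. The sketch below assumes a hypothetical format: a dictionary from each vendor feature to the set of your Level 1 or Level 2 capability names the vendor says it supports. The function names and the sample features and capabilities are placeholders.

```python
def find_unmapped_features(
    feature_map: dict[str, set[str]], capabilities: set[str]
) -> list[str]:
    """Vendor features that don't map to any of our capabilities (the fluff)."""
    ours = set(capabilities)
    return sorted(f for f, targets in feature_map.items() if not (set(targets) & ours))


def find_uncovered_capabilities(
    feature_map: dict[str, set[str]], capabilities: set[str]
) -> list[str]:
    """Our capabilities that no vendor feature claims to support."""
    claimed = set().union(*feature_map.values())
    return sorted(set(capabilities) - claimed)


# Hypothetical vendor response and capability names.
vendor_matrix = {
    "Lead scoring dashboard": {"Prioritize leads"},
    "Animated email signatures": set(),
    "Social listening": {"Monitor brand sentiment"},
}
our_capabilities = {"Prioritize leads", "Define the lead criteria"}

print(find_unmapped_features(vendor_matrix, our_capabilities))
print(find_uncovered_capabilities(vendor_matrix, our_capabilities))
```

The second list is just as useful as the first: it shows which of your needs a given vendor can’t help with at all.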

Since I’m not stepping into the solution space (yet), creating maturity language didn’t seem appropriate for this type of assessment. Therefore, I decided to keep it really simple and as unbiased as possible. At this point, almost any such language would lead stakeholders toward a technology conclusion instead of toward capability awareness.

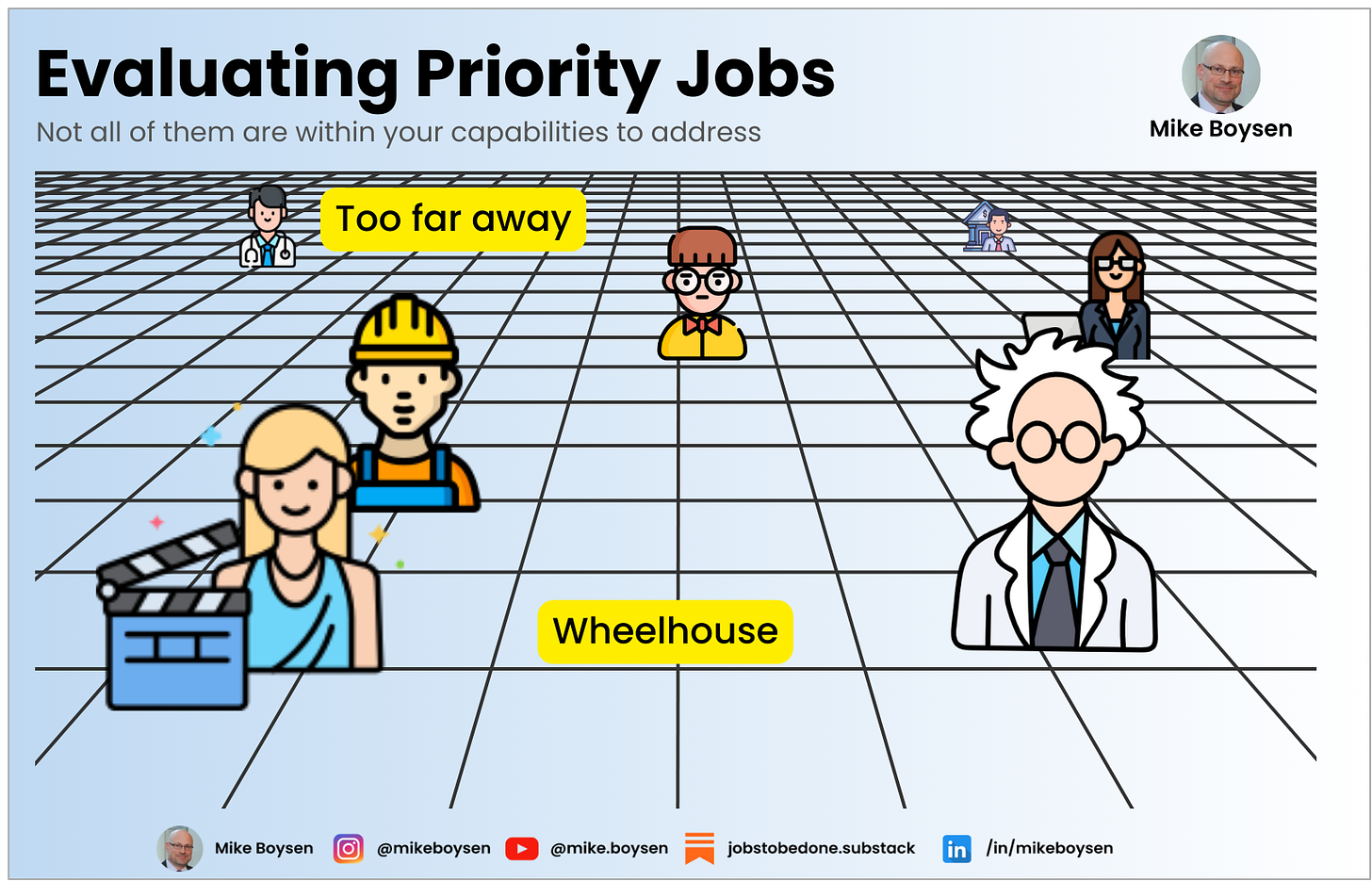

An assessment based on jobs theory should be about extreme capability awareness and targeting. It should also align to a discrete problem/objective described as a single job-to-be-done. 👇

I’m demonstrating one of the 15 steps in the model I linked to above. The complete capability maturity model will have closer to 100 sub-capabilities. So, when you begin moving into the requirements definition phase, you will have real end-user and stakeholder needs to trace your requirements to! Lots of them.

There are two sides to the coin.

Vendors can learn how to use a jobs lens to identify what customers need (traditional use), or

Customers can better articulate what they need and inform the vendors (suggested use)

At a minimum, corporate customers will have a better bargaining chip when it comes to negotiating away the costs of pitched products that over- or mis-serve them. Why pay for features you don’t want or need?

First, you need to have a job map and performance metrics. If you don’t, I cover that in other posts. 😆

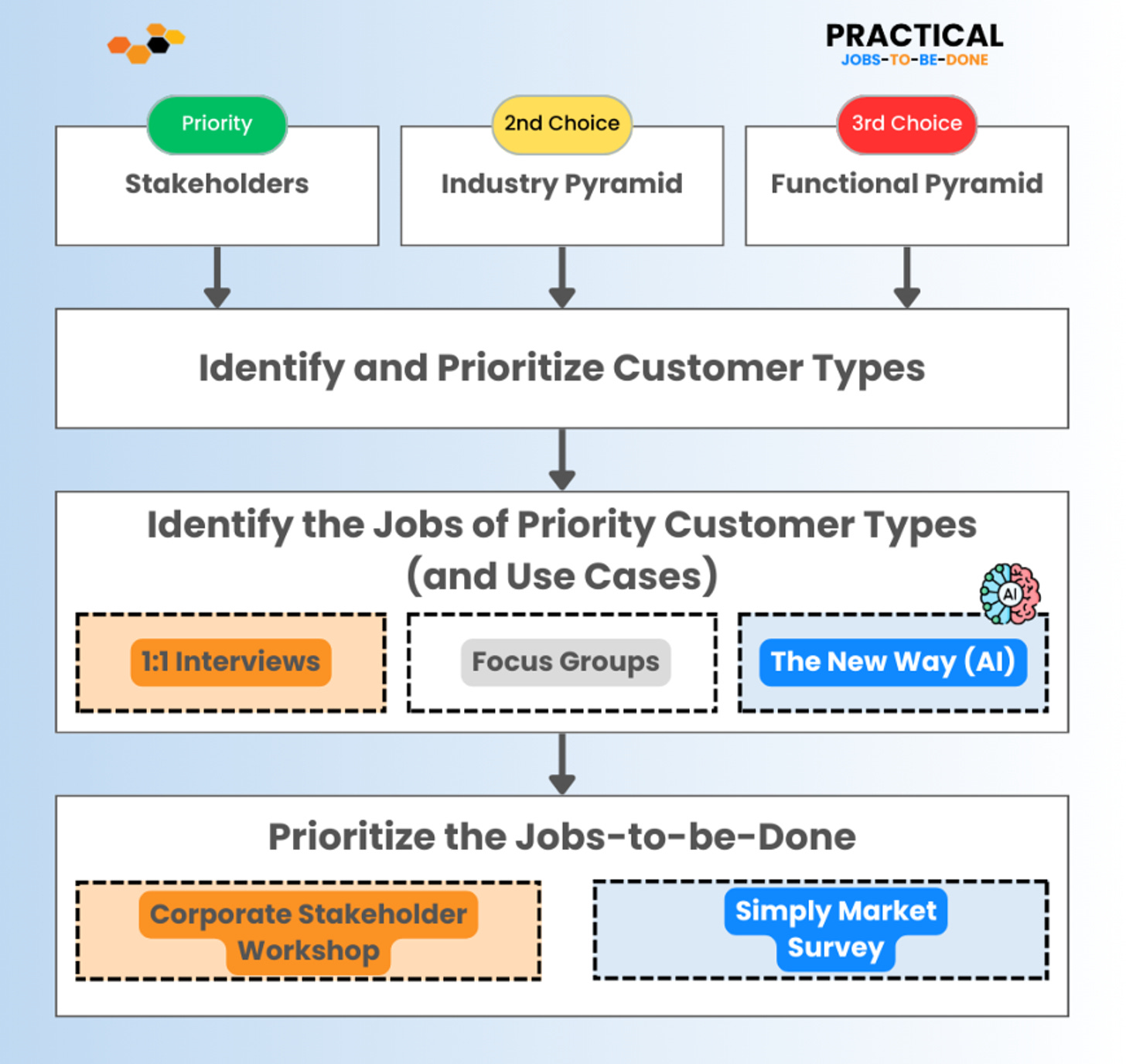

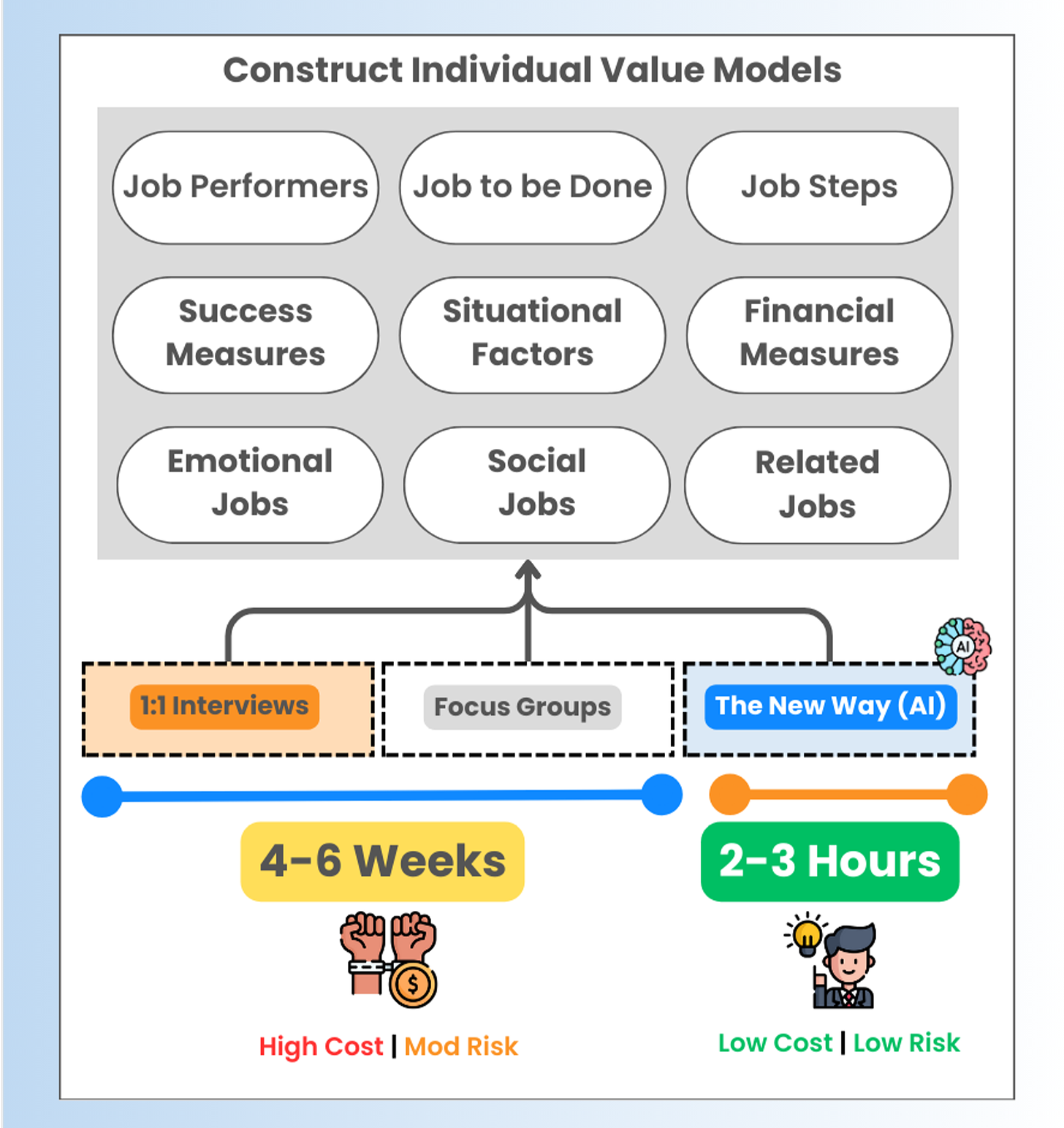

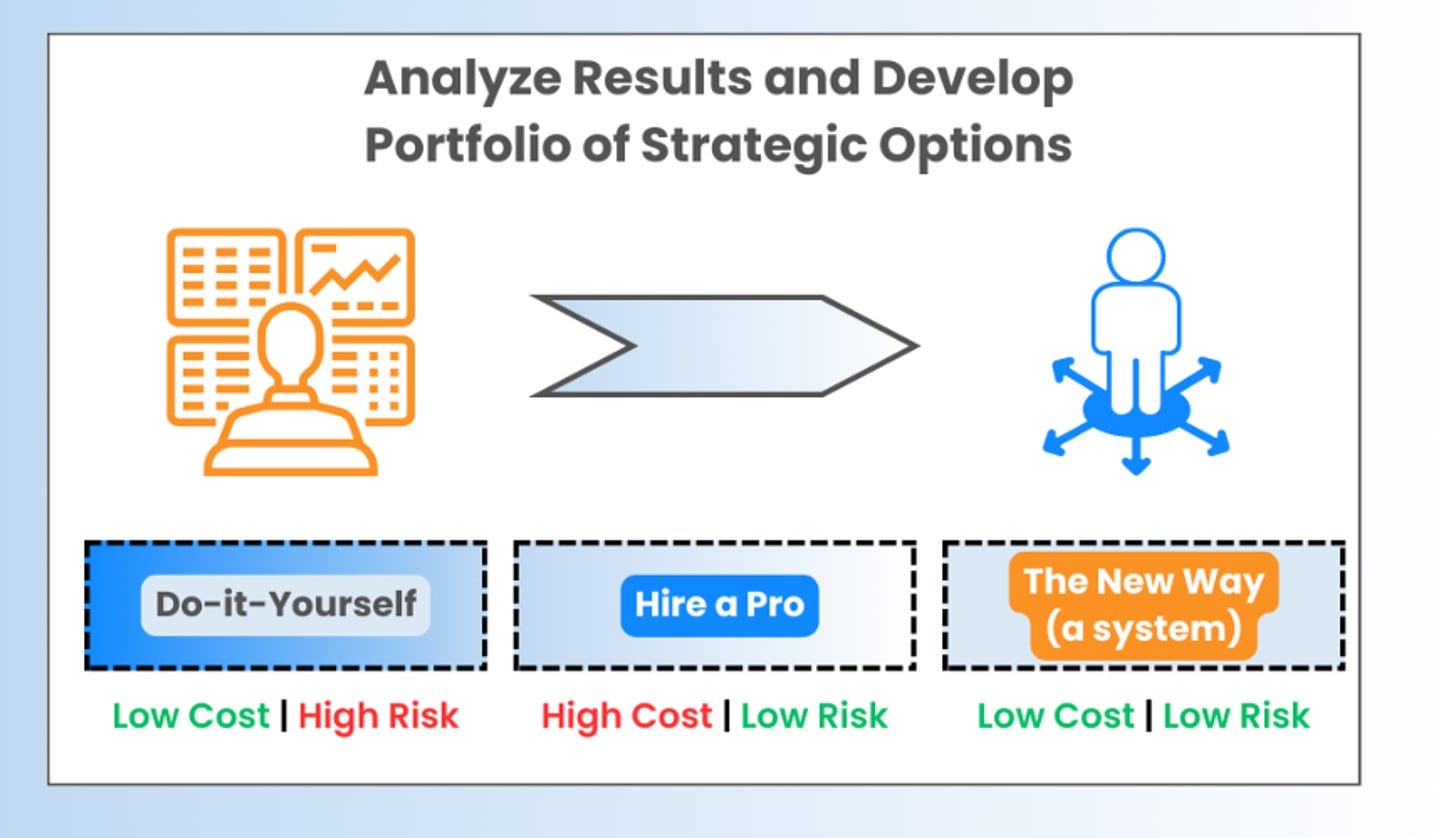

You could approach your assessment in a number of ways. One way would be to develop questions for each of these areas that help you evaluate each sub-capability, and then conduct 1:1 interviews with each stakeholder. It would then be up to you to determine how important each sub-capability is, how difficult it might be to achieve, and what its current level of maturity is. I would leave the desired level for a future-state workshop with all of the stakeholders. At that point, you’d want to confirm everything and define what actions could be taken for each capability or sub-capability, how those actions could be leveraged across capabilities, and how difficult those initiatives would be.

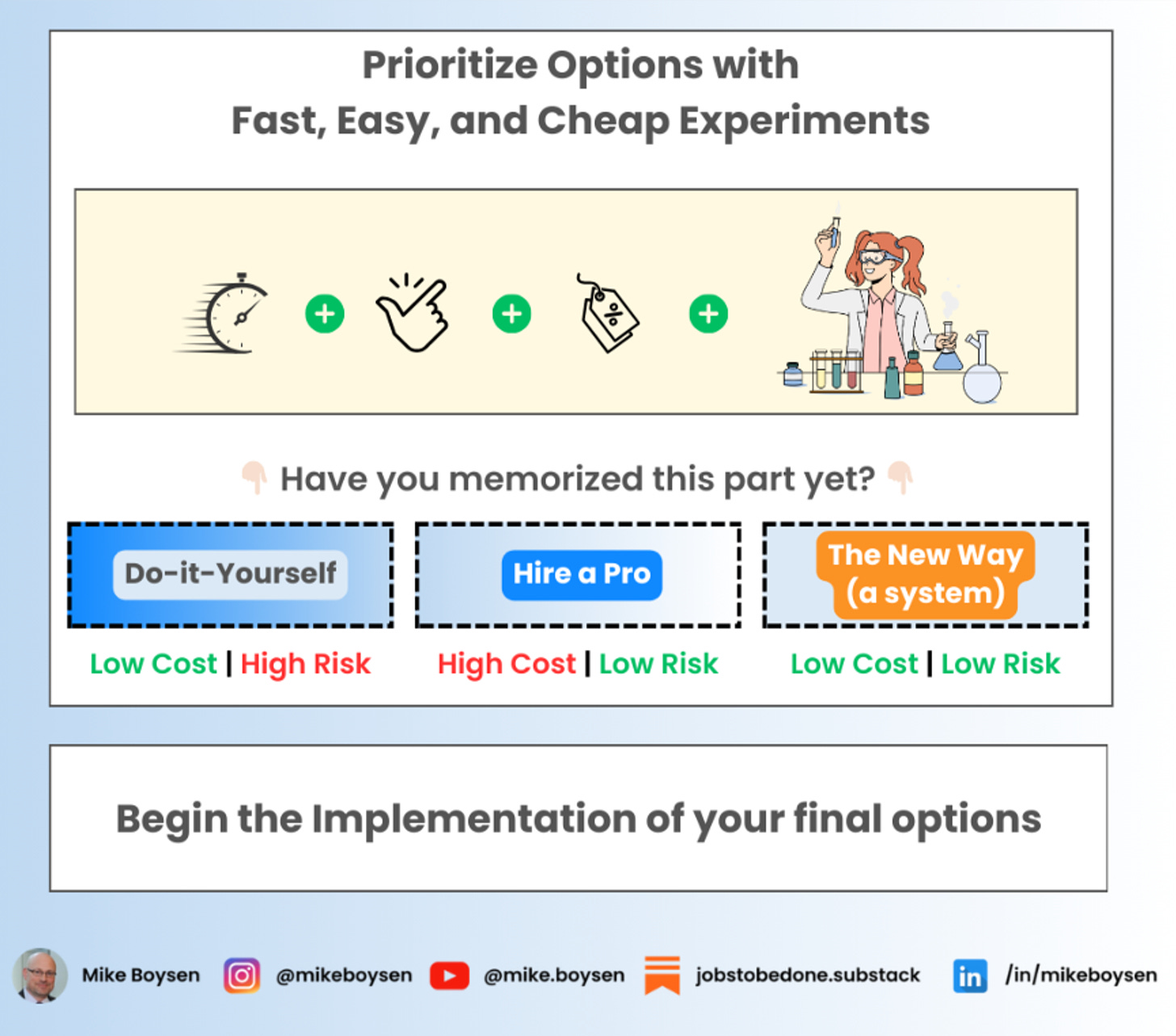

Another way would be to have each stakeholder assess every capability (or only those relevant to them), and then conduct a 1:1 interview with each stakeholder to review their assessment. You could also develop future-state workshops around each Level 1 capability to develop hypotheses and experiments (cheap, easy, quick) to test your ideas for closing gaps.

I’ll leave that up to you. But I’d like to discuss the rating criteria I’m using above, because they’re one of the adaptations I’ve made.

How important is this?

I’m recommending a 3-point scale with a neutral option in the middle. It’s far easier for people to get their minds around, and the level of fidelity is good enough. Anything more and people start critically analyzing potential bias in the results. I recommend simplicity.

Not at all important

Neutral

Important

How difficult is this?

Are teams struggling to accomplish this today, or are the available options so difficult that they don’t even try to achieve the sub-capability? While we could come up with a longer list of inputs into difficulty, for this exercise a 3-point scale like the one we use for importance is good enough. We have an option at each end of the spectrum, and a neutral option in the middle.

Not at all difficult

Neutral

Difficult

Current level of maturity

Are you seeing a pattern yet? I’m going to recommend that you simply use a 3-point scale to assess the maturity…

Lagging - the team knows that they are behind either a standard or their competitors

Basic - the team agrees that they are working on evolving this capability but they have not met or exceeded a standard or the competition yet

Leading - the team agrees that they are setting new standards and are ahead of the competition

If you want a higher level of fidelity, I grant you permission to do so! Most of the scales are made up and quite subjective, so I prefer to remain a simpleton.

This is also where we want to capture any evidence to support this assessment.
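If you’re collecting these ratings in a spreadsheet export or a script, the three current-state scales and the supporting evidence fit in a small record. This Python sketch is a hypothetical representation: the `IntEnum` values (1 to 3) are an assumption that simply keeps each scale ordered, and the `tally` helper just counts how stakeholders answered so you have something concrete to review in the 1:1 interviews or the workshop. It deliberately doesn’t compute a score, since none is prescribed here.

```python
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class Importance(IntEnum):
    NOT_AT_ALL_IMPORTANT = 1
    NEUTRAL = 2
    IMPORTANT = 3


class Difficulty(IntEnum):
    NOT_AT_ALL_DIFFICULT = 1
    NEUTRAL = 2
    DIFFICULT = 3


class Maturity(IntEnum):
    LAGGING = 1  # behind a standard or competitors
    BASIC = 2    # evolving, but not yet at or beyond a standard or competitors
    LEADING = 3  # setting new standards, ahead of competitors


@dataclass
class Rating:
    """One stakeholder's current-state rating of one sub-capability."""
    stakeholder: str
    sub_capability: str
    importance: Importance
    difficulty: Difficulty
    current: Maturity
    evidence: str = ""  # anything that supports the maturity rating


def tally(ratings: list[Rating], sub_capability: str) -> dict[str, Counter]:
    """Count the answers to each question for a single sub-capability."""
    rows = [r for r in ratings if r.sub_capability == sub_capability]
    return {
        "importance": Counter(r.importance.name for r in rows),
        "difficulty": Counter(r.difficulty.name for r in rows),
        "current": Counter(r.current.name for r in rows),
    }


# Hypothetical stakeholders, sub-capability, and evidence.
ratings = [
    Rating("Stakeholder A", "Sub-capability X", Importance.IMPORTANT,
           Difficulty.DIFFICULT, Maturity.LAGGING, "Manual process today"),
    Rating("Stakeholder B", "Sub-capability X", Importance.IMPORTANT,
           Difficulty.NEUTRAL, Maturity.BASIC),
]
print(tally(ratings, "Sub-capability X"))
```

Disagreements, like the split on difficulty above, are exactly what you’d want to talk through with stakeholders rather than average away.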

Desired level of maturity

And finally, I continue with my 3-point theme. Using the same scale as the current maturity level, the team agrees on where it needs to be within a reasonable timeframe, and on the actions necessary to get there. While I’m suggesting we stick with the jobs-to-be-done theme of measuring importance, you may also want to capture elements that describe the commercial impact of making the improvement, as well as the cost, speed, and level of effort to close the gap. These are not the same as the difficulty question, which is a current-state assessment of achieving the objective today.
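Because the desired level uses the same scale as the current level, the gap falls out directly once the workshop has agreed on both. Below is a minimal Python sketch of one possible way to list the open gaps. Ordering by importance first and then by gap size is an assumption, not a rule of the method, and the names (`Assessment`, `open_gaps`, the sample sub-capabilities) are hypothetical. It redefines the scales so that it runs on its own.

```python
from dataclasses import dataclass
from enum import IntEnum


class Importance(IntEnum):
    NOT_AT_ALL_IMPORTANT = 1
    NEUTRAL = 2
    IMPORTANT = 3


class Maturity(IntEnum):
    LAGGING = 1
    BASIC = 2
    LEADING = 3


@dataclass
class Assessment:
    """The agreed view of one sub-capability after the future-state workshop."""
    name: str
    importance: Importance
    current: Maturity
    desired: Maturity  # where the team needs to be within a reasonable timeframe


def open_gaps(assessments: list[Assessment]) -> list[tuple[str, int]]:
    """Return (name, gap size) for sub-capabilities below their desired level,
    most important first and, within equal importance, largest gap first."""
    behind = [a for a in assessments if a.desired > a.current]
    behind.sort(key=lambda a: (a.importance, a.desired - a.current), reverse=True)
    return [(a.name, a.desired - a.current) for a in behind]


# Hypothetical sub-capabilities.
assessments = [
    Assessment("Sub-capability A", Importance.IMPORTANT, Maturity.BASIC, Maturity.LEADING),
    Assessment("Sub-capability B", Importance.IMPORTANT, Maturity.LAGGING, Maturity.LEADING),
    Assessment("Sub-capability C", Importance.NEUTRAL, Maturity.LAGGING, Maturity.LEADING),
    Assessment("Sub-capability D", Importance.IMPORTANT, Maturity.LEADING, Maturity.LEADING),
]
print(open_gaps(assessments))
```

If you also capture commercial impact, cost, speed, or effort, they could be added as extra fields and folded into the sort key however your team prefers.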

In closing

For those of you who may not be in product development but are obsessed with jobs-to-be-done and how you can use it, this might be one option. Just imagine what it would be like to develop a Request for Information (RFI) and ask vendors how they will help you achieve these types of capabilities. Been there, done that! LOL

I’m curious to hear your thoughts. I know there are many product development people out there who will scratch their heads at this. You get plenty of love in other posts. But those of you who are more general consultants will probably get where I’m going with this.

Did I nail it? Do I need to change something? Is there anything here that could adversely affect the outcome of an assessment? Let me know below…

Accelerators

The Universal JTBD Performance Canvas - by Mike Boysen (substack.com)