Working with people involved in journey management, journey mapping, and journey analysis, I've noticed something: for some reason, they embrace superficial results. Perhaps it's for one of the following reasons:

Not authorized to dig too deep

Not skilled enough to dig deep enough

Not enough time allocated to the exercise

Not enough of the team budget was spent this year, so it's at risk of being cut next year. Let's use it!

Maybe you have some other observations. Let me know in the comments. In the meantime, here are some tips based on what I've seen.

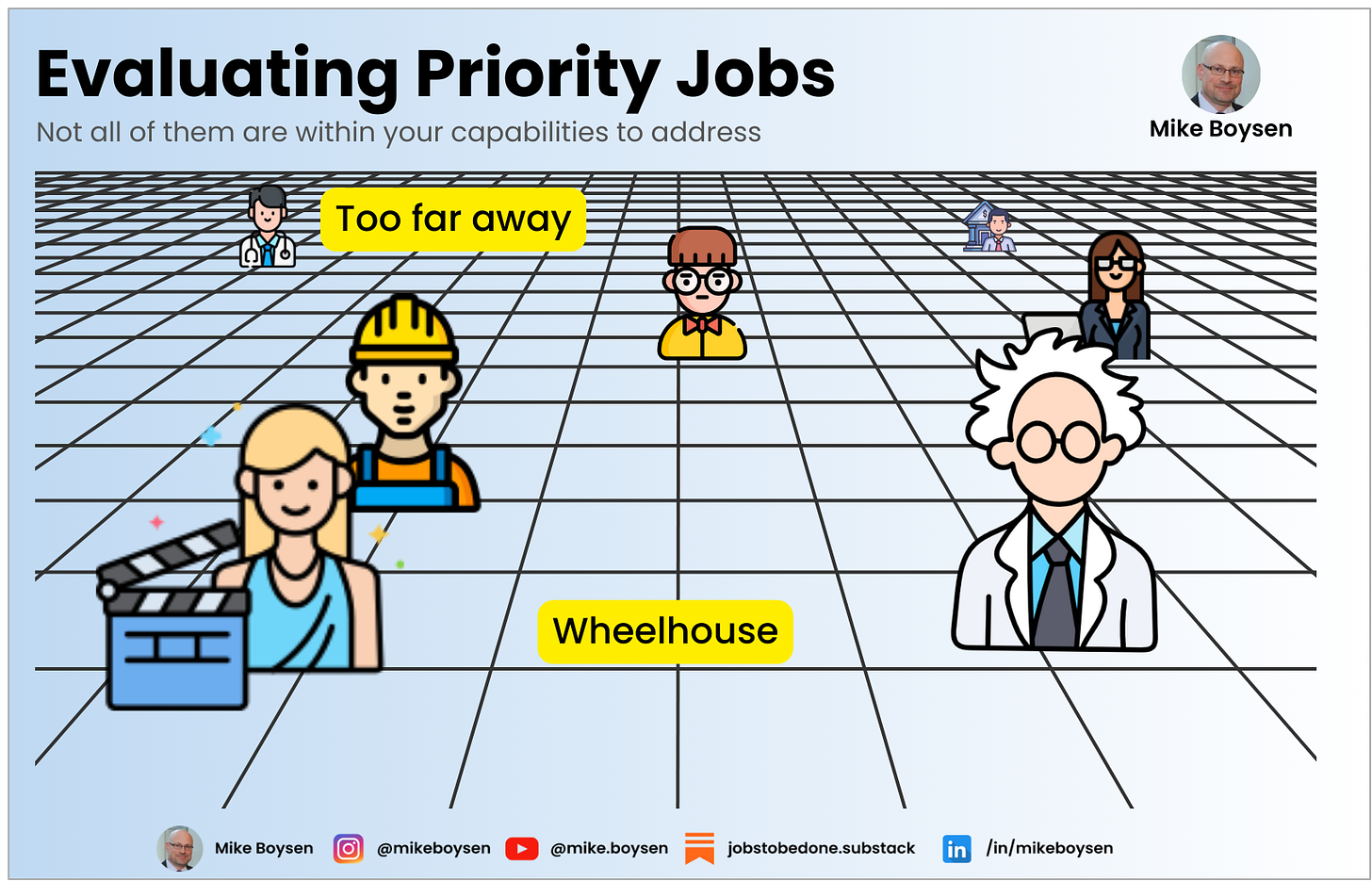

Make sure you can take action

If you're asking end users to rate success, make sure you know what action you could take on every single metric you ask them to rate. Don't ask questions that you can't, or your organization won't, act on.

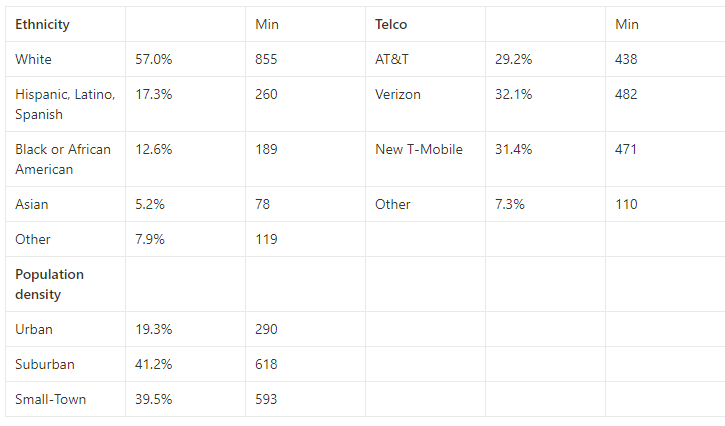

Here's an example. Suppose you're a wireless telco that wants to optimize the journey prospects take when they decide to switch from another carrier and consider your brand. Today, consumers have a lot of product options, but they also have a lot of channel options.

Let's say you heard that growth and better experiences come from raising the level of problem-solving abstraction. That means you can't study only the physical store experience. You need to consider every channel, and the digital era offers plenty of them.

The problem is that your leadership believes in stores. They believe that building stores and infrastructure in rural areas is the pathway to growth. So, while studying a decision journey and a purchase journey across channels seems like a great idea, the insights generated will not be acted upon.

Yeah, that's an extreme example, right? 🤫 You thought I'd be talking about questions whose countermeasure would require some materials-science breakthrough that doesn't exist yet, right? That too!

While this might be frustrating, the benefit of leaving things out is that you have more room to go deep and broad in areas that make everyone comfortable. Don't worry, you will develop insights that invalidate their hypotheses anyway. 😉

Maybe then they'll unleash you into the world of real insights and innovation.

Surveys should not be qualitative

Spend your qualitative research time building a purposeful question framework, so your survey collects the data you need in a form that reveals value gaps through prioritization. Anything short of this is just theater for individual performers.

Open-ended questions at scale simply amplify what a pain in the ass it is to synthesize unstructured and unmanaged inputs. Garbage in, synthesis bias out. Maybe AI will save you.

I include hypothetical questions in this category as well. They seem structured and quantitative, but participants are prioritizing something they've never done. So, they are in an imaginary playland at your expense.

What strategic decisions are you supposed to make with this kind of information?

Don't use fancy formulas

It's easy to be fooled by fakery. But who's fooling who? When you're collecting ordinal data, use ordinal models to analyze the data.

You've probably been wowed by metric models that use fancy techniques like top-two-box scoring and aggregating the data before doing any calculations. I'm not saying this is smoke and mirrors, just a loss of signal.

Why not calculate things at the lowest level of the data, the respondent? You can always average up.

The reason to follow this suggestion is that your ranking approach loses no information. You keep all of the data, and all of the relationships, all the way up the chain.

If you have two 5-point scales, keep only the top two points of each, and then aggregate each scale, you've lost the relationship between those scales before the data even reaches your magical algorithm.
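To make that signal loss concrete, here's a minimal Python sketch with hypothetical data. It assumes a 1-5 importance scale and a 1-5 satisfaction scale, treats 4-5 as the top two box, and uses a made-up definition of "underserved" (important but not satisfied) purely for illustration. It isn't the formula any particular vendor or model uses.

```python
# Hypothetical data: 4 respondents, each rating one item on two 1-5 scales.
importance = [5, 5, 2, 2]
sat_a = [2, 2, 5, 5]  # the people who care are the ones who are unhappy
sat_b = [5, 5, 2, 2]  # the people who care are the ones who are happy


def top_two_box(scores):
    """Share of respondents who answered 4 or 5 on a 1-5 scale."""
    return sum(1 for s in scores if s >= 4) / len(scores)


def aggregate_gap(importance, satisfaction):
    """Aggregate first: collapse each scale to one number, then compare."""
    return top_two_box(importance) - top_two_box(satisfaction)


def respondent_underserved_share(importance, satisfaction):
    """Calculate per respondent first, then average up.

    A respondent is flagged (hypothetical rule) when the item is important
    to them (4-5) but they are not satisfied with it (1-3).
    """
    flags = [1 if i >= 4 and s < 4 else 0 for i, s in zip(importance, satisfaction)]
    return sum(flags) / len(flags)


print("A:", aggregate_gap(importance, sat_a), respondent_underserved_share(importance, sat_a))
print("B:", aggregate_gap(importance, sat_b), respondent_underserved_share(importance, sat_b))
# A: 0.0 0.5
# B: 0.0 0.0
```

Both data sets look identical once each scale is collapsed to a top-two-box percentage, yet in A half the respondents are underserved and in B none are. That difference only survives if you compute at the respondent level before averaging up.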

Is it possible that there could be missed opportunities? How about false positives? Is this what you're looking for?

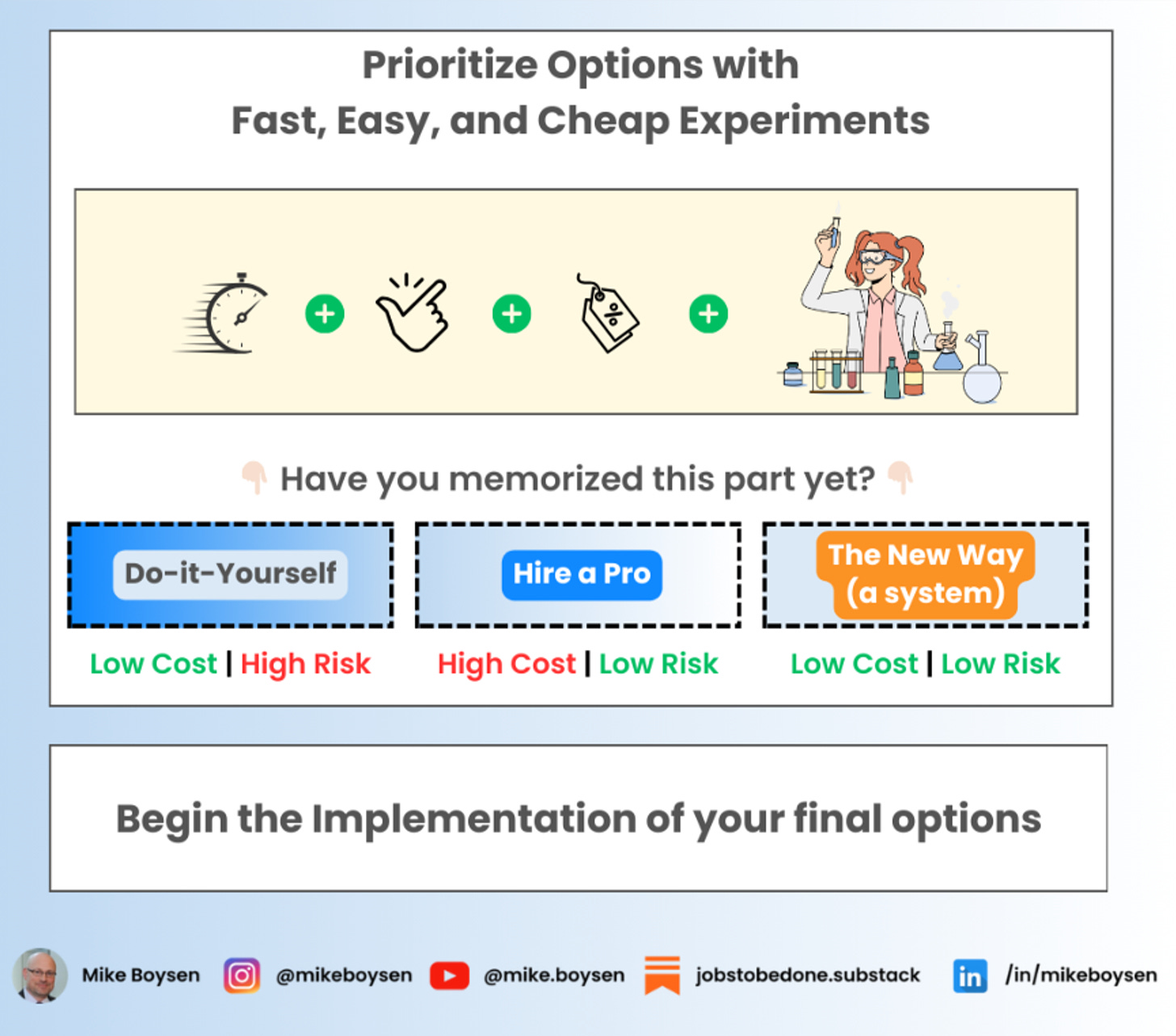

It's unlikely an experienced data scientist would recommend that approach, but apparently some do. Just use simple ranking tools; they'll tell you what you need to know no matter how you slice and dice your data.

And, you'll be able to explain it to your stakeholder.
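Here's one way a simple, explainable ranking could look, as a minimal Python sketch. The journey steps, ratings, and the "underserved" rule (importance rated 4-5, satisfaction rated 1-3) are hypothetical and chosen only for illustration.

```python
# Hypothetical survey responses: item -> list of (importance, satisfaction)
# pairs, one pair per respondent, both on 1-5 scales.
responses = {
    "compare plans across carriers": [(5, 2), (4, 3), (5, 5), (3, 2)],
    "port my existing number": [(5, 4), (4, 5), (2, 1), (5, 5)],
    "find a store nearby": [(2, 2), (3, 1), (4, 2), (1, 5)],
}


def underserved_share(pairs):
    """Share of respondents who rate the item important (4-5) but not satisfying (1-3)."""
    flags = [imp >= 4 and sat < 4 for imp, sat in pairs]
    return sum(flags) / len(flags)


def rank_items(responses):
    """Score each item at the respondent level, then sort from most to least underserved."""
    scored = {item: underserved_share(pairs) for item, pairs in responses.items()}
    return sorted(scored.items(), key=lambda kv: kv[1], reverse=True)


for rank, (item, share) in enumerate(rank_items(responses), start=1):
    print(f"{rank}. {item}: {share:.0%} underserved")
# 1. compare plans across carriers: 50% underserved
# 2. find a store nearby: 25% underserved
# 3. port my existing number: 0% underserved
```

Every number in the output traces back to a count of actual respondents, which is exactly what makes it easy to walk a stakeholder through.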

Don't be afraid to capture detail

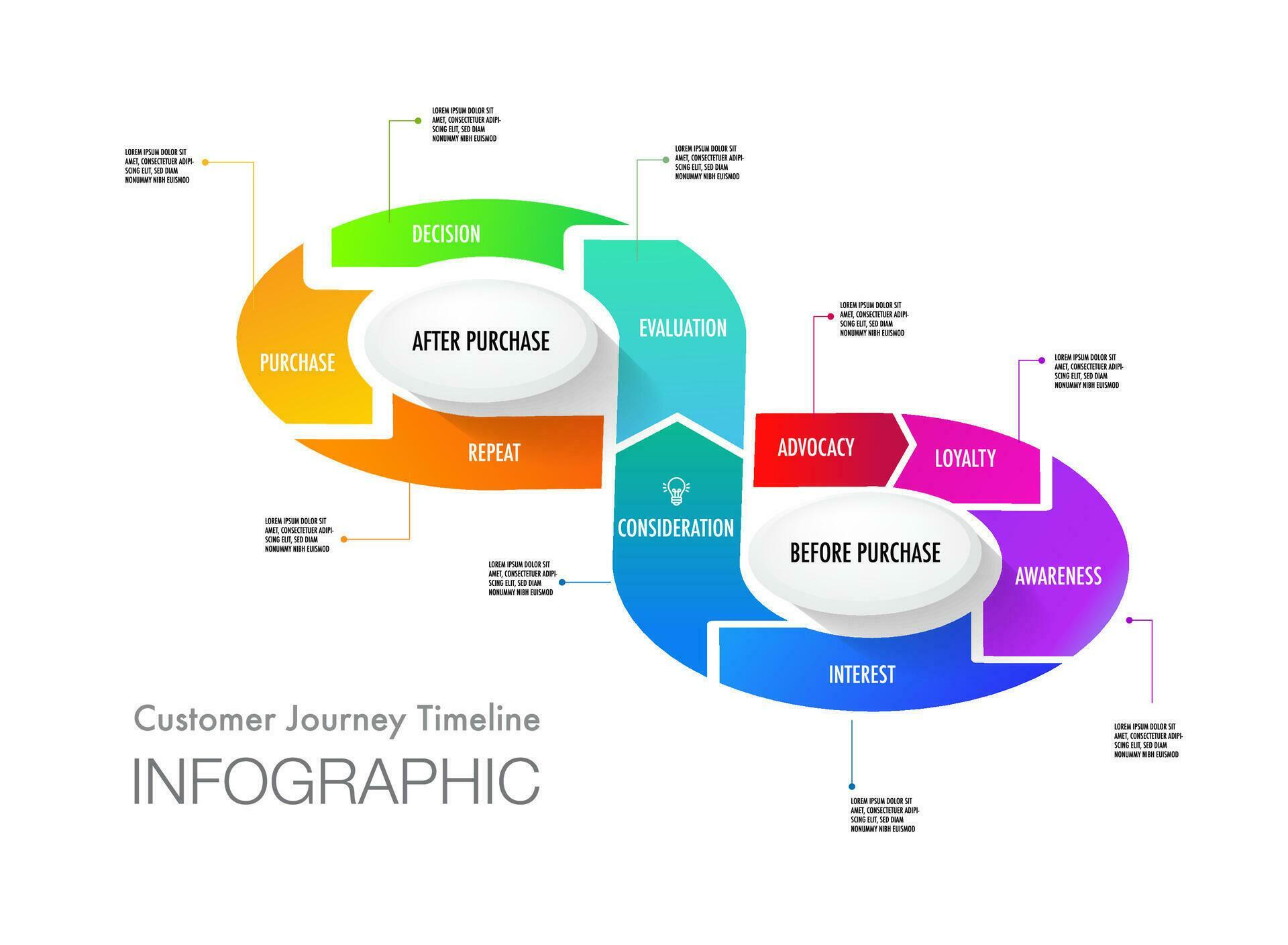

If this is your idea of a customer journey, I'm going to guess you have about 2 years of experience: you were shown an infinity loop on day one and then tasked with spending 2 weeks studying consumers within the context of this loop...and then another 2 weeks...and then another 2 weeks...for a total of 52 cycles. So, you have 2 weeks of experience 52 times.

You simply can't take action on this kind of stuff. Some of the concepts are company-centric (advocacy) or aren't journeys at all (repeat), and every one of them must be studied individually and in great detail.

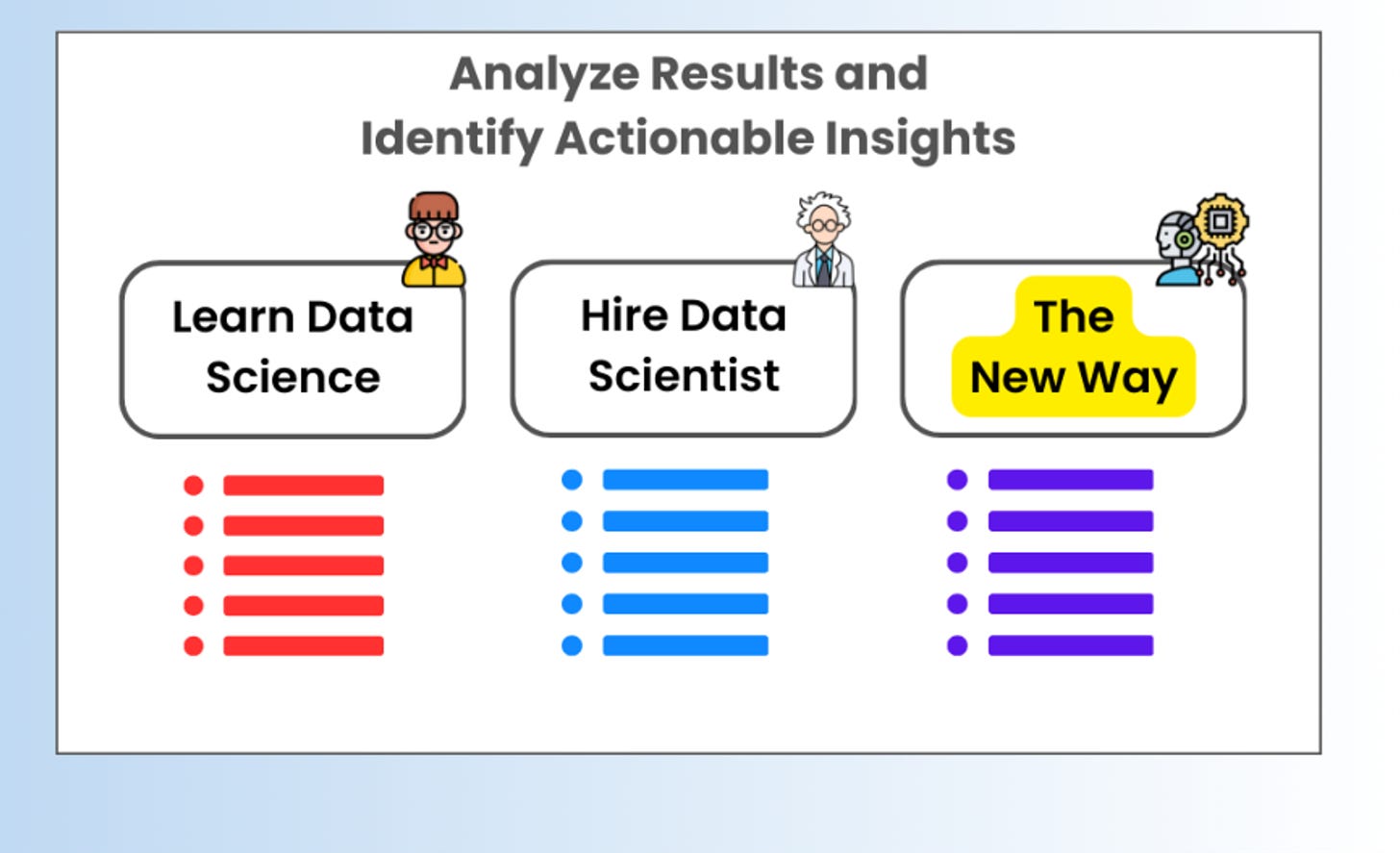

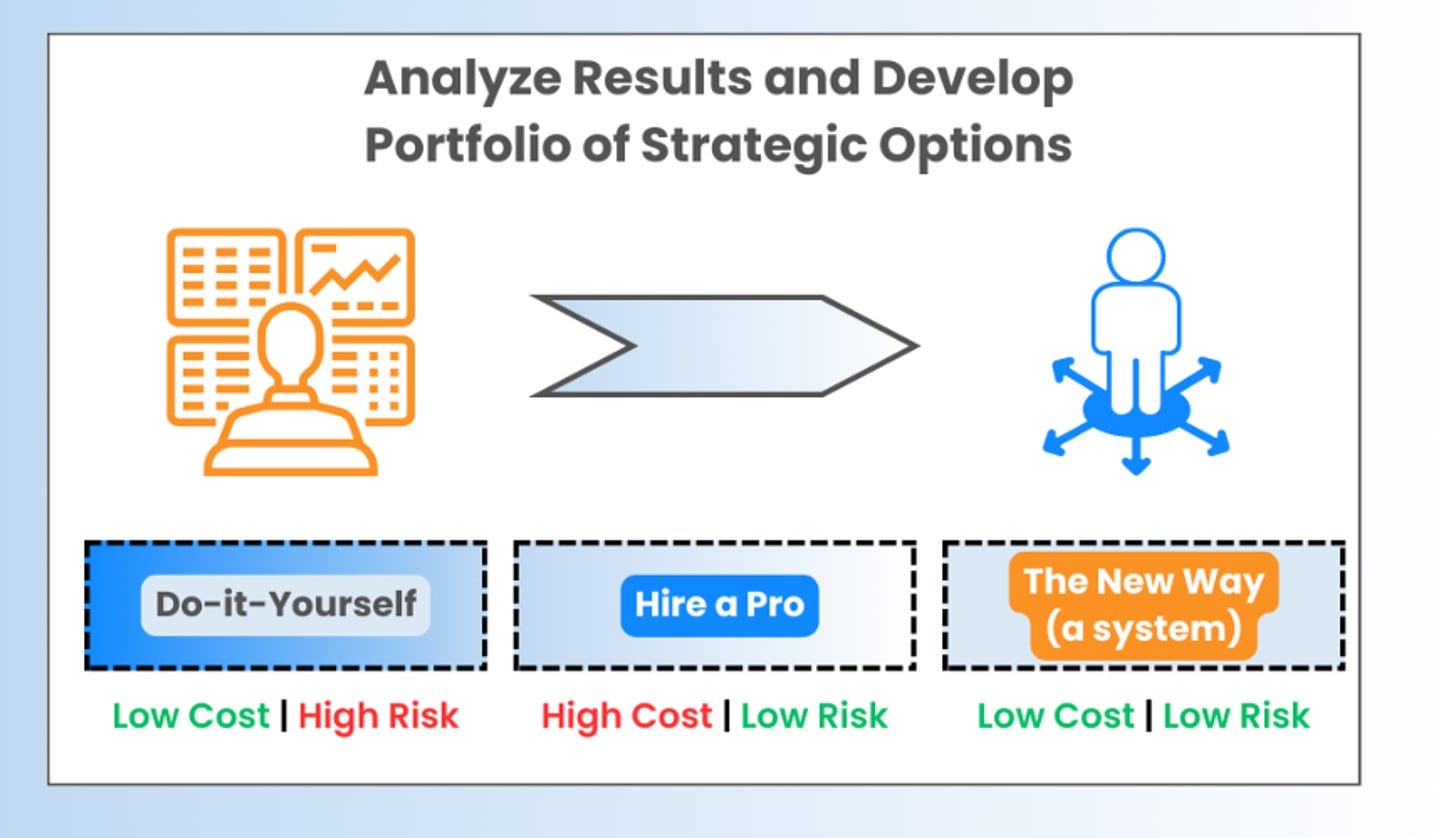

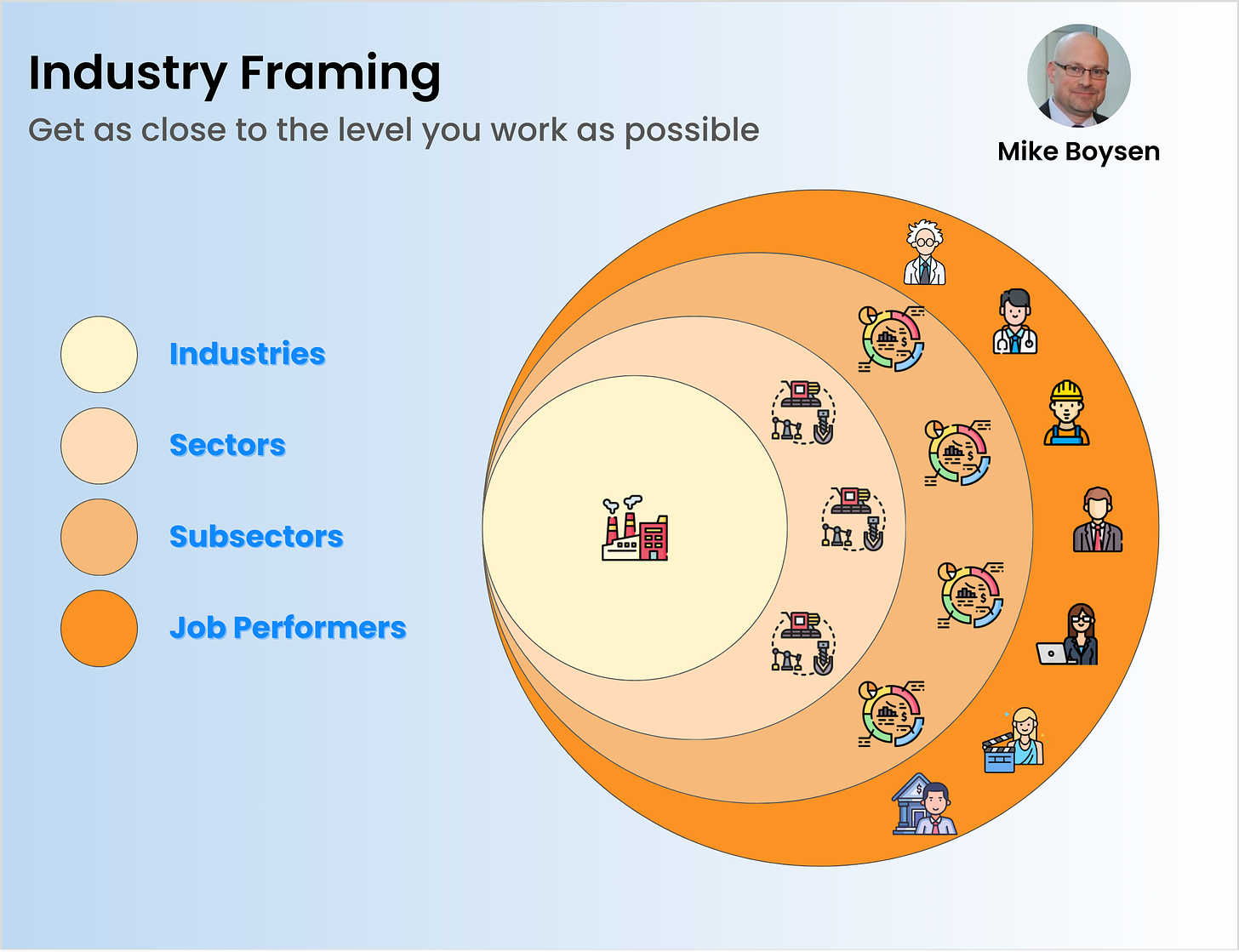

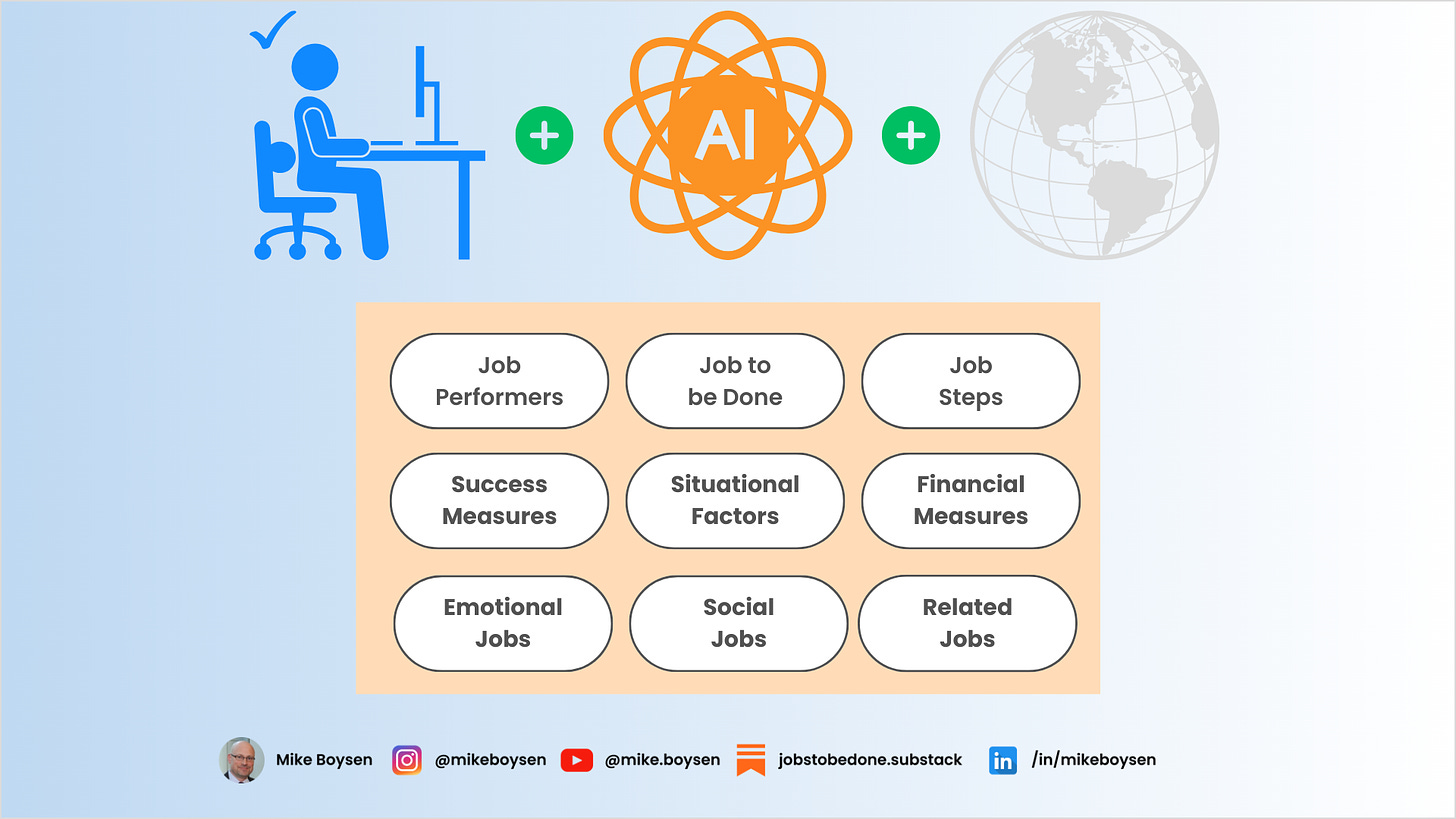

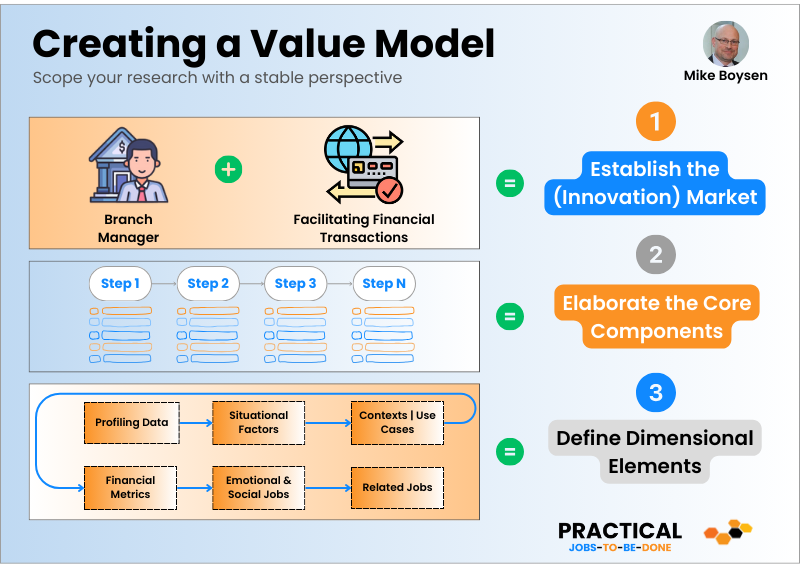

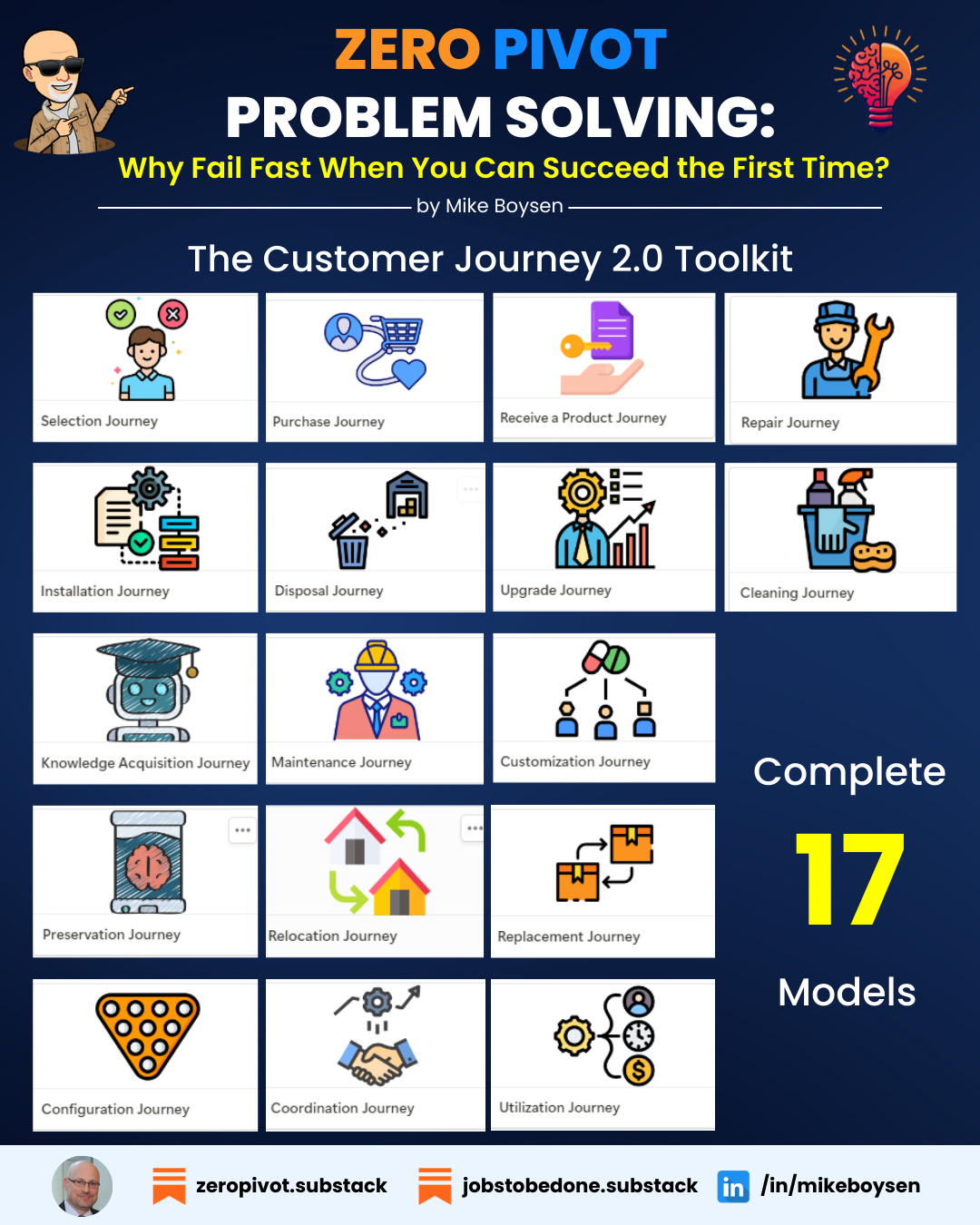

There is a much better and more insightful approach, one so granular that it used to be out of reach for traditionally trained insights researchers. In this model, you can go deep and broad, and aggregate up the chain all day long.

I have a base toolkit that goes so far beyond that simple diagram that I hate to give it away for free, but here it is anyway: https://jtbd.one/data-driven-journeys

Don't tell anyone 🤫

I'm working on a fine-tuned way to combine this with complementary downstream methods, but if you want insights, this is the basis for the question framework I mentioned earlier.

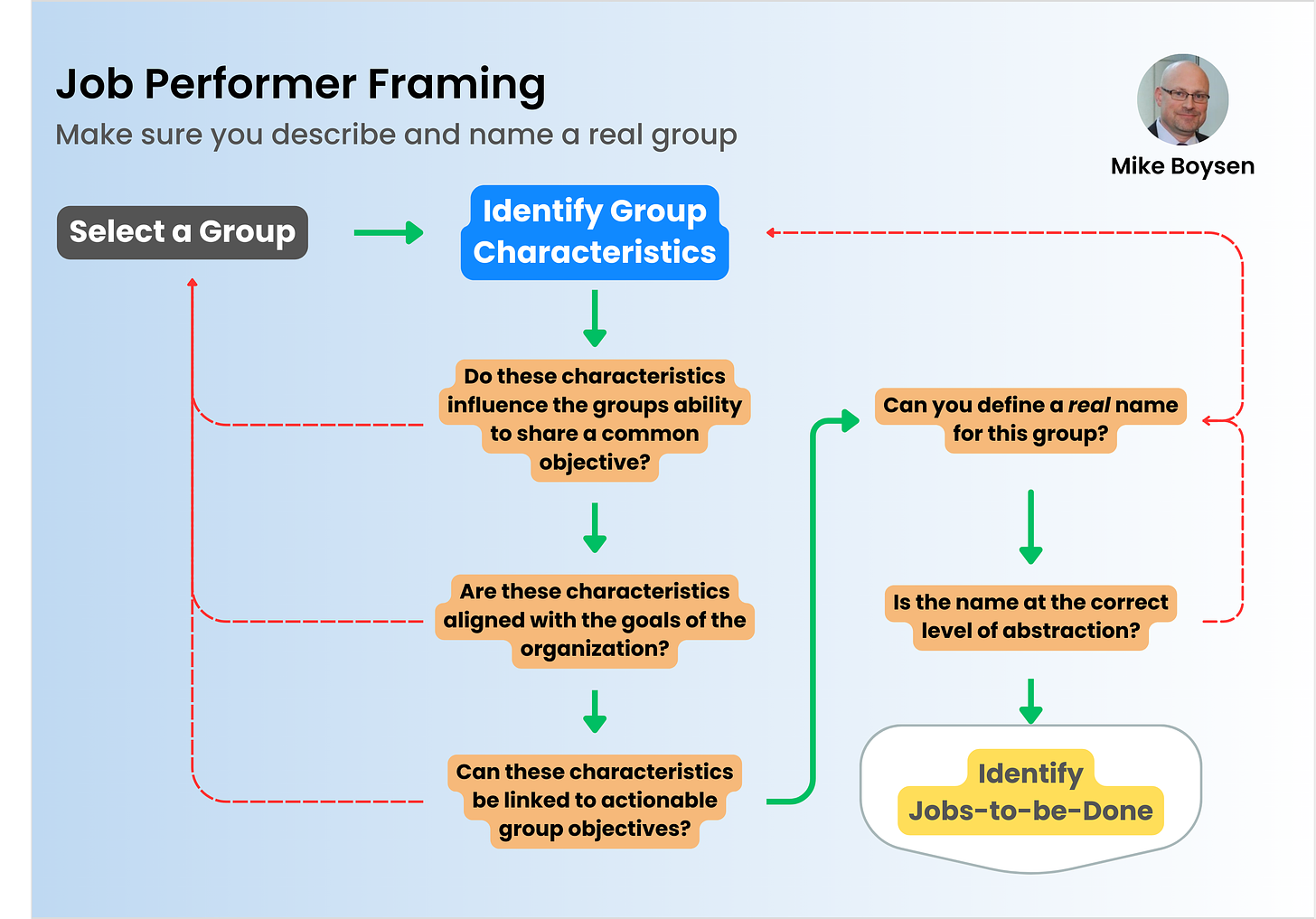

Never assume you understand customer needs

This is what it all boils down to. HiPPO culture (deferring to the highest-paid person's opinion) assumes it understands what customers need. Yet CX projects like these fail at a high rate, much as products do. I'm not sure how much CX contributes to product failure, but the two are sides of the same coin.

Anyway, common reasons for failure include a lack of strategic approach (thanks, HiPPO), insufficient customer insights (a low-resolution lens), and inadequate technology integration (a failure to collaborate across the organization).

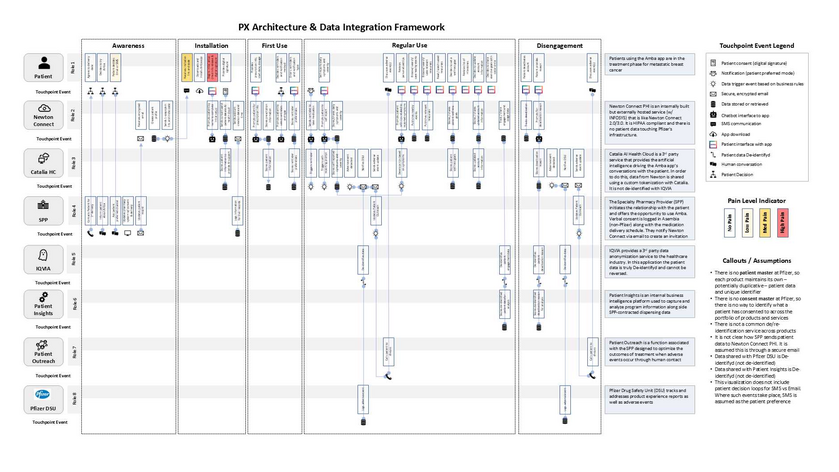

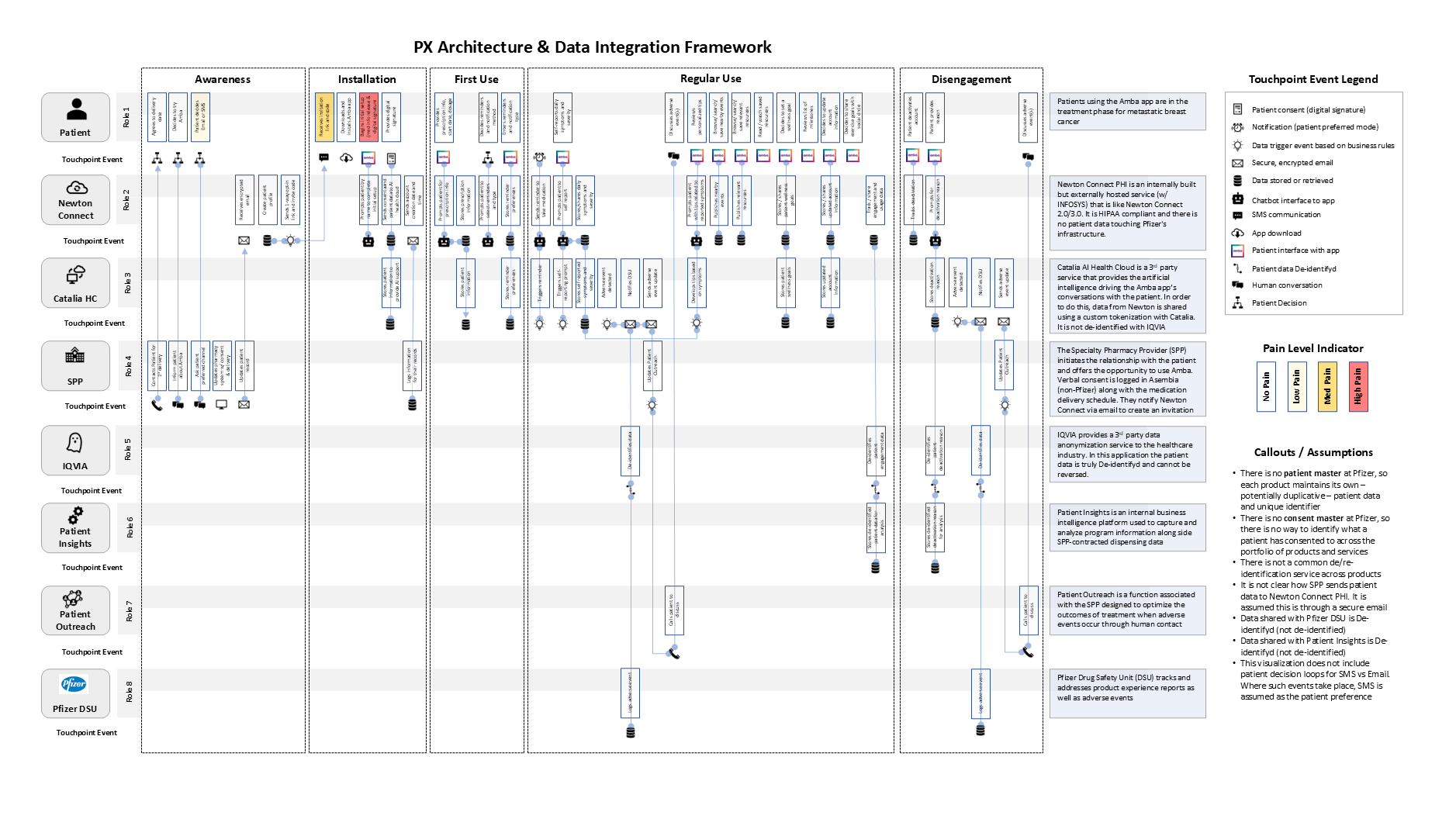

This 👇🏻 is not the data collection mechanism. It's a multi-layered service blueprint. You see that first row? That's what the CX team produced. It's represented in the Experience layer in the next image. Each row will have its own drill-down.

There are no customer needs depicted anywhere, though. Or, nothing that I would place a bet on. 🤑

The problem is that the experience layer has no real data whatsoever. Nothing was measured, either. It's pure workshop material: all text, all ideas, no hard prioritization or segmentation. My toolkit lays the foundation for that kind of work.

I had to build the rest of the layers out (and pull it all together into a blueprint) with limited time and resources. But, it got done.

If I had done this all myself, we would have had an explicit representation of each segment (as separate layers) and its underserved and overserved needs, in great depth and breadth.
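Here's a rough sketch of what a per-segment view of underserved and overserved needs could look like when it's built from respondent-level data. The segments, the need, the ratings, and both threshold rules are hypothetical; this is an illustration, not the toolkit itself.

```python
from collections import defaultdict

# Hypothetical rows: (segment, need, importance, satisfaction), 1-5 scales.
rows = [
    ("switchers", "compare plans", 5, 2),
    ("switchers", "compare plans", 4, 4),
    ("loyal", "compare plans", 2, 5),
    ("loyal", "compare plans", 3, 4),
]


def segment_profile(rows):
    """Return {segment: {need: (underserved_share, overserved_share)}}.

    Hypothetical rules: underserved = important (4-5) but not satisfied (1-3);
    overserved = not important (1-3) but satisfied (4-5).
    """
    # Per segment and need: [respondents, underserved count, overserved count]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0, 0]))
    for segment, need, imp, sat in rows:
        tally = counts[segment][need]
        tally[0] += 1
        tally[1] += imp >= 4 and sat < 4
        tally[2] += imp < 4 and sat >= 4
    return {
        segment: {need: (under / n, over / n) for need, (n, under, over) in needs.items()}
        for segment, needs in counts.items()
    }


print(segment_profile(rows))
# {'switchers': {'compare plans': (0.5, 0.0)}, 'loyal': {'compare plans': (0.0, 1.0)}}
```

The same need can be underserved for one segment and overserved for another, which a single blended score would hide.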

By the way, each column in this masterpiece is actually a separate journey (some of them company-centric). Exploding those out would create completely different sets of these stacks. The tools now exist to do this in a completely new and more productive way.

There are no more excuses about customer needs, whether they're product and service needs or experience and journey needs. I've got you covered if you're interested. Let me know.

To sum things up:

Don't measure things you can't act on

Surveys should be quantitative

If you can't explain it, don't use it

Fear low-resolution insights

Never assume, always measure

#cx #customerjourney #servicedesign #jtbd #innovation